Building trust in AI decisions

The TRUST Framework is an operational backbone that turns Responsible AI into a measurable, actionable reality. By focusing on Transparency, Robustness, Unbiased outcomes, Security, and Testing, it provides a rigorous standard for evaluating and building AI systems that inspire confidence and remain sustainable over the long term. It also offers a clear pathway to implement and validate Responsible AI across every part of the ecosystem.

Latest News

Data Visualization team publishes blog post on benchmarking AI models for scatterplot-related tasks

Pedro Bizarro presents keynote at the Center for Responsible AI Forum 2025

João Palmeiro presents at VIS2025’s 1st Workshop on GenAI, Agents, and the Future of VIS

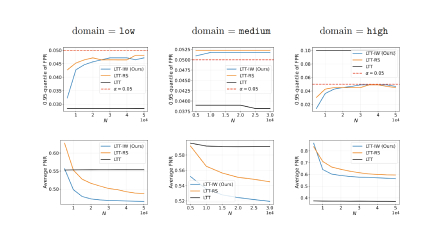

Ricardo Ribeiro gives a presentation on transfer learning at the DSPT meetup in Lisbon.

Ricardo Ribeiro presents our evaluation framework for transfer learning at ECML 2025.

Our AI team presents at the ECML PhD Forum.

Recent Blog Posts

Benchmark It Yourself (BIY): Preparing a Dataset and Benchmarking AI Models for Scatterplot-Related Tasks

When we need to visualize and interact with millions, or even just thousands, of individual points while analyzing data, we typically resort to rendering them in the browser using a canvas.

João Palmeiro

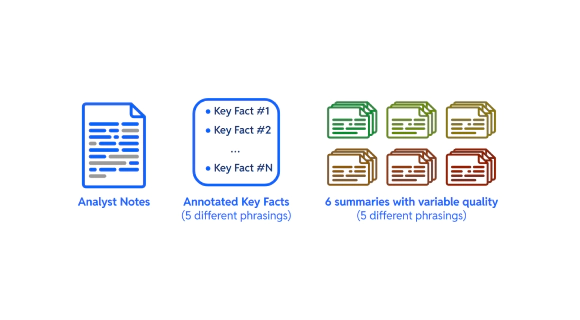

Benchmarking LLMs in Real-World Applications: Pitfalls and Surprises

"Are newer LLMs better?" In this post, Jean Vieira Alves and Ferran Pla Fernández explore that question through rigorous benchmarking. Spoiler/hint: recall Betteridge's law of headlines ("Any headline that ends in a question mark can be answered by the word no.")

Jean V. Alves and Ferran Pla Fernández

Causal Concept-Based Explanations

Over the years, we have evolved from simple, often rule-based algorithms to sophisticated machine learning models. These models are remarkably good at finding patterns in large datasets but, due to their complexity, it is often hard for a human to understand why a given input leads to its output. This is especially problematic in areas where high-stakes decisions are made and where human-AI collaboration is critical.

Jacopo Bono

Research Areas

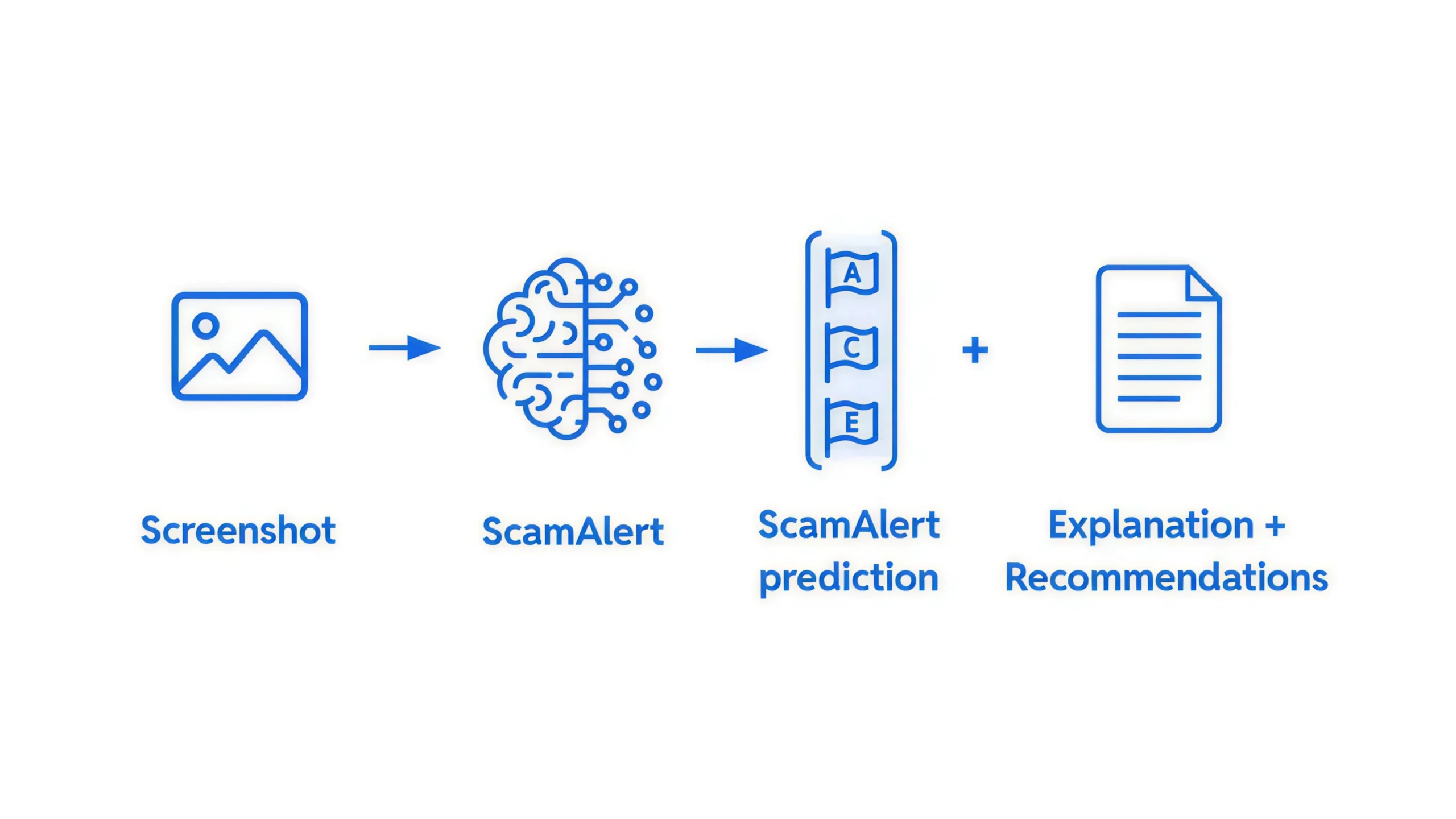

AI Research

The AI group's mission is to build next-generation RiskOps AI that is responsible and explainable by design, safeguarding businesses and people from fraud and financial crime.

Data Visualization

The Data Visualization group aims to make complex data clearer for fraud analysts and data scientists through insightful, beautiful data experiences.

Systems Research

The Systems Research group aims to improve the performance and reliability of the RiskOps Platform through innovation in a number of key areas.

Page printed on 7 Mar 2026. Please see https://research.feedzai.com for the latest version.