Publication

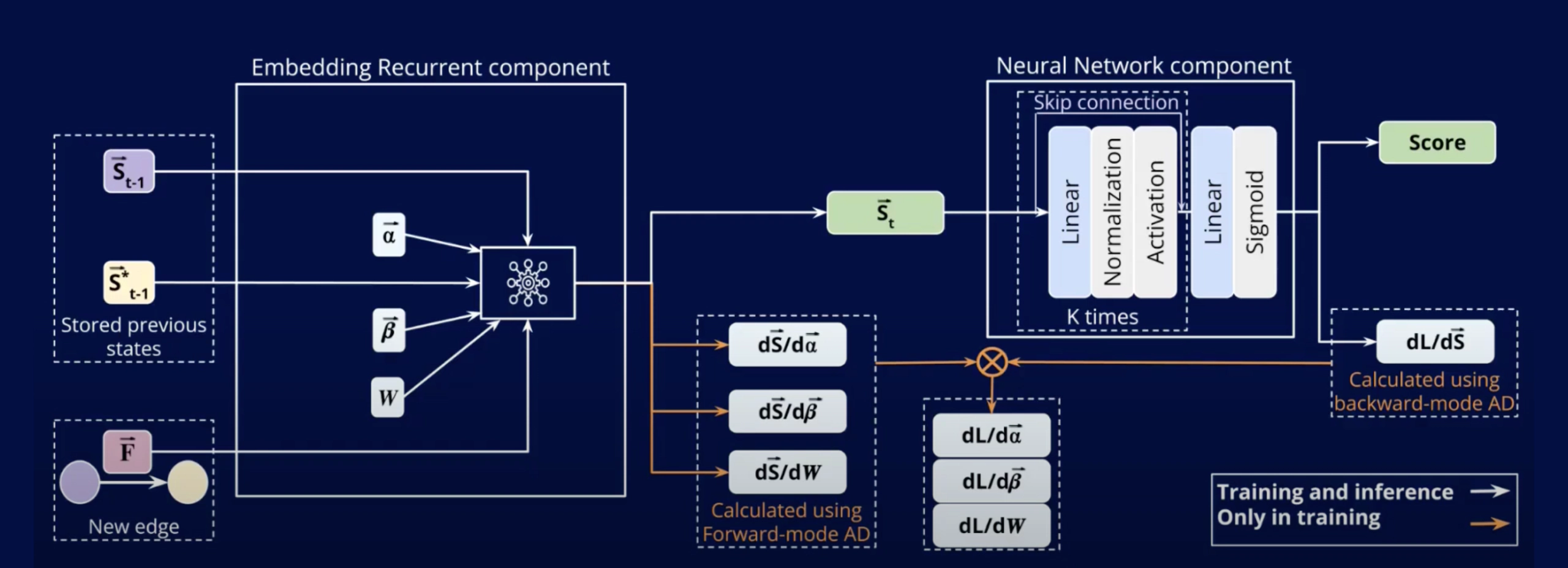

Deep-Graph-Sprints: Accelerated Representation Learning in Continuous-Time Dynamic Graphs

Published in Transactions on Machine Learning Research (TMLR)

Abstract

Continuous-time dynamic graphs (CTDGs) are essential for modeling interconnected, evolving systems. Traditional methods for extracting knowledge from these graphs often depend on feature engineering or deep learning. Feature engineering is limited by the manual, time-intensive work of crafting features, while deep learning approaches suffer from high inference latency, making them impractical for real-time applications. This paper introduces Deep-Graph-Sprints (DGS), a novel deep learning architecture designed for efficient representation learning on CTDGs with low-latency inference requirements. We benchmark DGS against state-of-the-art (SOTA) feature engineering and graph neural network methods on five diverse datasets. The results indicate that DGS achieves competitive performance while improving inference speed by 4x to 12x compared to other deep learning approaches on our benchmark datasets. Our method effectively bridges the gap between deep representation learning and low-latency application requirements for CTDGs.